For years, voice automation was synonymous with friction: rigid IVRs, endless menus, and customers hanging up before reaching an answer. That experience left a mark and explains why many organizations still hesitate when AI voice agents are mentioned.

Today, the technology is different. AI voice agents already operate in real environments, at scale, with measurable impact. Even so, they still fail when implemented as just another technology layer, without rethinking processes or objectives. In those cases, it is not the AI that disappoints; it is the implementation decision.

To understand when an AI voice agent shifts from being a promise to becoming a smart business decision, it’s worth examining what large-scale organizations are doing: what problem they solved, how they designed it, and why it worked.

Case Study: Erica, Bank of America

One of the clearest global benchmarks in conversational agents — including voice — is Erica, Bank of America’s virtual assistant, natively integrated into its digital ecosystem.

Erica is more than a channel. It is an operational layer that combines voice and text to resolve high-volume, low-friction interactions within daily banking experiences.

Its core capabilities include:

- Balance and transaction inquiries

- Explanations of charges and fees

- Proactive alerts on spending patterns

- Payment and due date reminders

According to Bank of America's own reporting, customers' digital interactions surpassed 26 billion in 2024, with Erica as one of the main recurring points of contact within that volume. This sustained use eased pressure on traditional contact centers and moved millions of routine inquiries to automated channels without degrading the customer experience.

However, the main lesson from Erica is not scale — it is design. The assistant does not wait for the customer to ask a question. It anticipates needs, flags anomalies, and supports everyday decisions. At that point, it stops being reactive support and becomes a financial co-pilot.

This shift — from responding to anticipating — explains why the model works.

It combines five key components:

1. Natural Language Processing (NLP)

Erica interprets spoken and written queries using advanced language understanding models. It does not respond based on keywords but on intent, allowing it to manage variations, context, and conversational continuity.

2. Conversational Orchestration

The AI decides what to do with each interaction: respond directly, ask for more information, execute an action, or escalate to a human. Not every conversation follows the same path, and that is intentional.

3. Deep Integration with Banking Systems

Erica connects to Bank of America’s internal systems, enabling real-time balance checks, transaction identification, personalized alerts, and reminders. Without integration, there is no value. The AI does not simulate answers — it operates on live data.

4. Analytics and Pattern Detection

The assistant analyzes customers' financial behavior to detect anomalies or relevant events, enabling proactive features such as unusual spending alerts and advance payment reminders.

5. Security, Control, and Governance

The entire system operates under strict security, authentication, and regulatory compliance standards. The AI functions within defined boundaries: what it can do, how far it can go, and when it must escalate to a human agent.
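The article does not say how Erica's pattern detection is built. As a purely illustrative sketch, assuming a simple statistical rule (a z-score of a new charge against the customer's own recent charges) and a fixed reminder window, the proactive side of components 4 and 5 could look like this. The function names, the 3-standard-deviation threshold, the minimum history of 5 charges, and the 3-day lead time are all hypothetical choices, not Bank of America's method.

```python
from datetime import date, timedelta
from statistics import mean, stdev

# Hypothetical thresholds, chosen only for illustration
Z_THRESHOLD = 3.0   # flag charges more than 3 standard deviations above normal
MIN_HISTORY = 5     # require some history before judging a charge
REMINDER_DAYS = 3   # remind the customer this many days before a due date


def is_unusual(history: list[float], amount: float) -> bool:
    """Return True if `amount` is far above the customer's usual spending."""
    if len(history) < MIN_HISTORY:
        return False  # too little data to define "usual"
    mu = mean(history)
    sigma = stdev(history)
    if sigma == 0:
        # Perfectly constant history: any higher amount is a deviation
        return amount > mu
    return (amount - mu) / sigma > Z_THRESHOLD


def due_soon(due: date, today: date) -> bool:
    """Return True if a payment falls due within the reminder window."""
    return today <= due <= today + timedelta(days=REMINDER_DAYS)


def proactive_alerts(history: list[float], new_amount: float,
                     due: date, today: date) -> list[str]:
    """Collect the alerts the assistant would raise without being asked."""
    alerts = []
    if is_unusual(history, new_amount):
        alerts.append(f"Unusual charge of ${new_amount:.2f}")
    if due_soon(due, today):
        alerts.append(f"Payment due on {due.isoformat()}")
    return alerts


if __name__ == "__main__":
    past_charges = [42.0, 38.5, 45.0, 40.0, 39.5, 41.0]
    print(proactive_alerts(past_charges, 400.0, date(2025, 3, 10), date(2025, 3, 8)))
    # ['Unusual charge of $400.00', 'Payment due on 2025-03-10']
```

A real deployment would likely use richer signals (merchant, location, time of day), but the design idea is the same: the assistant evaluates live data it already has and speaks first when something crosses a defined rule.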

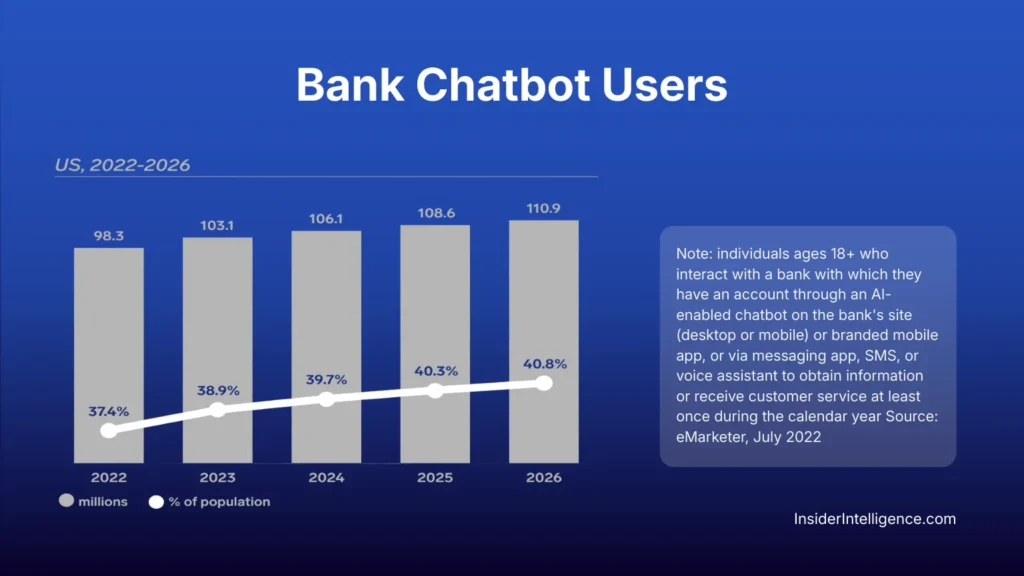

This is not an isolated case. According to eMarketer data, chatbot and virtual assistant usage continues to grow steadily in the United States, driven not only by Bank of America but also by institutions such as Wells Fargo and Truist, which have recently implemented AI-based financial service solutions.

This sustained growth in banking chatbot usage suggests that adoption is neither a passing trend nor driven by technology alone; it reflects a shift in customer behavior. In the U.S., more than 110 million people are projected to interact with automated banking assistants by 2026, over 40% of U.S. adults.

This level of penetration indicates that AI-driven conversation is already part of the everyday financial journey.

In that context, voice and chat agents stop being an optional innovation and become basic customer support infrastructure, as long as they are well integrated and designed to complement the human team.

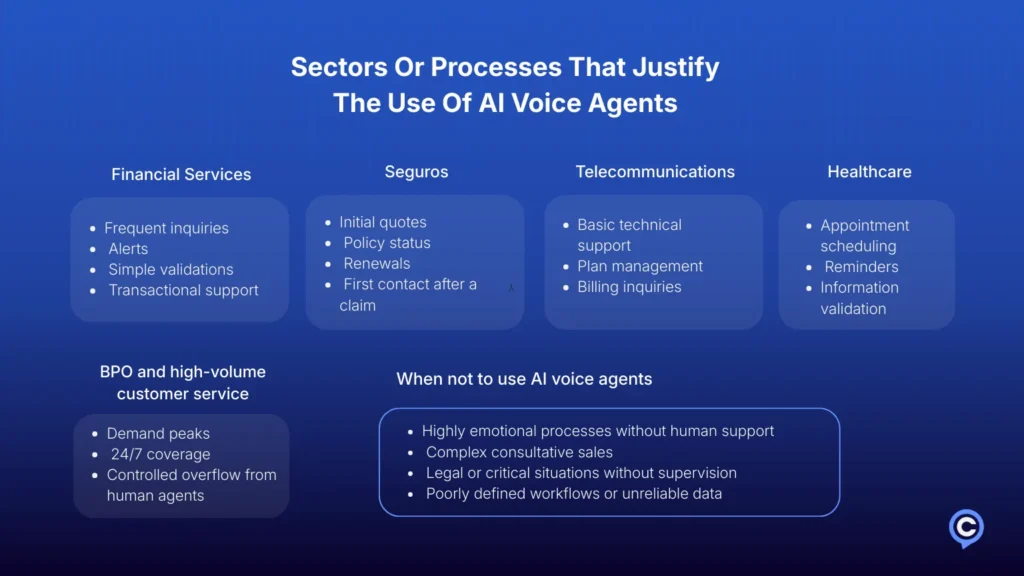

When Do AI Voice Agents Work Best?

AI voice agents do not succeed because of industry — they succeed because of process type. They make sense where there is volume, repetition, clear rules, and the need for immediate response.

In those scenarios, voice reduces friction, accelerates resolution, and frees human capacity for higher-value tasks. Where those conditions are absent, automation often fails.

Hybrid Models, Not Extremes

Successful implementations are not “all AI” or “all human.” They are well-designed hybrid models where each component does what it does best. AI handles volume, repetition, and speed. Humans intervene when context, exception, or emotional load appears.

Voice does not replace other channels. It integrates as one more layer within the conversational ecosystem, connected to data, rules, and teams, improving both the customer experience and operations.

In well-orchestrated hybrid environments, AI handles most repetitive interactions, freeing human teams for complex cases. Gartner projected that by 2025, 80% of customer service interactions would be managed by AI, a forecast that points to automation's potential when it is paired with human oversight.

The common mistake is automating for the sake of automation. The real strategic advantage lies in designing delegation: what AI resolves, how far it goes, under what rules, and when it escalates.
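Designing delegation can be made concrete as an explicit routing policy. The sketch below is a hypothetical illustration, not ChatCenter Service's or Bank of America's implementation: it assumes an upstream language-understanding step has already produced an intent and a confidence score, and the intent names, required fields, upset flag, and 0.75 confidence floor are invented for the example. Each turn is sent to one of the four paths described earlier: respond, ask for more information, execute an action, or escalate to a human.

```python
from dataclasses import dataclass, field
from enum import Enum


class Route(Enum):
    RESPOND = "respond"
    ASK_FOR_INFO = "ask_for_info"
    EXECUTE_ACTION = "execute_action"
    ESCALATE = "escalate"


# Hypothetical delegation policy: what the AI may resolve on its own
INFORMATIONAL_INTENTS = {"balance_inquiry", "fee_explanation"}
ACTION_INTENTS = {"set_payment_reminder"}
REQUIRED_FIELDS = {"set_payment_reminder": {"due_date"}}
CONFIDENCE_FLOOR = 0.75  # below this, the AI is not sure enough to act


@dataclass
class Turn:
    intent: str          # output of an upstream NLU step (assumed)
    confidence: float    # NLU confidence score in [0, 1] (assumed)
    authenticated: bool  # has the caller passed identity verification?
    fields: dict = field(default_factory=dict)
    customer_upset: bool = False  # e.g. from a sentiment signal (assumed)


def route(turn: Turn) -> Route:
    """Decide which path a single conversational turn should take."""
    # Emotional load or low confidence: hand over to a human
    if turn.customer_upset or turn.confidence < CONFIDENCE_FLOOR:
        return Route.ESCALATE
    # Outside the defined boundaries: hand over to a human
    if turn.intent not in INFORMATIONAL_INTENTS | ACTION_INTENTS:
        return Route.ESCALATE
    # Account data requires authentication first
    if not turn.authenticated:
        return Route.ASK_FOR_INFO
    # Actions need every required field before they run
    missing = REQUIRED_FIELDS.get(turn.intent, set()) - set(turn.fields)
    if missing:
        return Route.ASK_FOR_INFO
    if turn.intent in ACTION_INTENTS:
        return Route.EXECUTE_ACTION
    return Route.RESPOND


if __name__ == "__main__":
    print(route(Turn("balance_inquiry", 0.92, authenticated=True)).name)       # RESPOND
    print(route(Turn("set_payment_reminder", 0.90, authenticated=True)).name)  # ASK_FOR_INFO
    print(route(Turn("mortgage_dispute", 0.95, authenticated=True)).name)      # ESCALATE
```

Keeping the policy in explicit sets and thresholds, rather than leaving it implicit, makes the delegation boundaries reviewable: a team can see exactly what the AI resolves, what it must ask for first, and when it escalates.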

Are you evaluating the implementation of AI voice agents? At ChatCenter Service, we design these solutions starting from process architecture.